Consumer Focus: AI’s Political Paradox – When Conservatives Cheer for the AI “Muse” and Liberals Hit Pause

Everyone expects AI to divide us—we explore why conservatives might call AI an inspiration, while liberals are reaching for the emergency brake.

In the arena of American politics, you might expect debates about artificial intelligence to split along familiar lines: perhaps tech optimism among progressive futurists versus caution among conservative traditionalists. Think again. Our pilot consumer study on AI perception uncovered a surprising political divide: self-identified conservatives in our sample were often more optimistic and excited about AI than liberals were. In fact, conservatives were more inclined to see AI not as a threat, but as something almost inspirational – even viewing AI as a “Muse” or creative partner – whereas liberals tended to express greater concern about AI’s risks. It’s a political role reversal that upends stereotypes and offers a fresh perspective on the cultural narrative around technology.

Figure 1: The Effect of Political View on AI Excitement

Note: The regression results show a positive and significant relationship between conservative political views and excitement about recent AI developments (b = .28, p < .001). In other words, the more conservative a person is, the more excited and positive they are about recent AI developments.
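For readers curious about what a coefficient like b represents, here is a minimal sketch of a simple least-squares fit. It is not the study's analysis: the scores are invented for illustration, the 1-to-7 political scale and the single-predictor model are assumptions, and `ols_slope` is a hypothetical helper name.

```python
# Illustrative only: these scores are invented, NOT data from the pilot study.
# Assumed scale (not stated in the article): 1 = very liberal ... 7 = very conservative.

def ols_slope(x, y):
    """Return (intercept, slope) of the least-squares line y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = covariance of x and y divided by the variance of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical respondents: political view and self-rated excitement about AI.
political_view = [1, 2, 3, 4, 5, 6, 7]
excitement = [3.0, 3.5, 3.4, 4.0, 4.1, 4.6, 4.7]

intercept, slope = ols_slope(political_view, excitement)
print(f"intercept = {intercept:.2f}, slope b = {slope:.2f}")
```

A positive slope means that each one-point step toward the conservative end of the scale goes with a higher average excitement score. The p-value reported in the note is a separate calculation that this sketch does not attempt.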


Consider this head-scratcher: on key questions like “Does AI’s benefit outweigh its risks?”, conservatives scored notably higher in favor of AI’s benefits. They were also significantly more likely to imagine AI in positive, human-like roles – the helpful smith crafting solutions, the oracle providing knowledge, the muse sparking creativity. Liberals, on the other hand, were more reserved and skeptical in many of these areas (and although our data didn’t show liberals preferring negative metaphors outright, their relative caution was clear). This runs contrary to the common assumption that left-leaning folks are uniformly pro-science/tech and right-leaning folks are wary of high-tech social disruption. Reality is more nuanced. In our study, a young Republican might gush about ChatGPT’s potential, while a left-leaning independent worries about AI’s biases and unchecked power. It’s as if the classic optimist/pessimist hats have been swapped.

Why would conservatives embrace AI as a boon? One interpretation is that conservatives often emphasize business innovation and economic growth. AI, as the next big industrial revolution, promises efficiency and new markets. A conservative-minded respondent might see AI as a tool to boost productivity and competitiveness, aligning with pro-market values. Indeed, our conservative respondents were more likely to agree that AI will create value and opportunities, rather than destroy them. They even saw AI in almost romantic terms: the “Muse” metaphor – viewing AI as a source of inspiration – had much stronger resonance on the right than the left. Perhaps this reflects an enthusiasm for human-AI collaboration – the idea that a creative person plus a clever AI can achieve great things (painting a bit of a Norman Rockwell meets The Jetsons picture of harmonious progress).

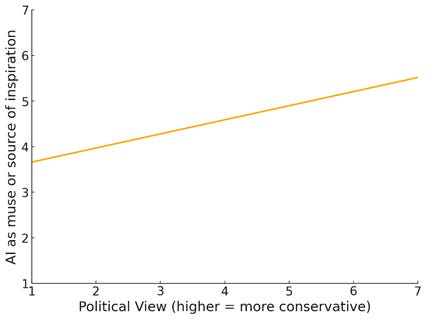

Figure 2: The Effect of Political View on the Perception of Muse

Note: The regression results reveal a positive and significant association between conservative political views and perceiving AI as a creative muse (b = .39, p < .001). In other words, individuals with more conservative beliefs are more likely to see AI not only as capable of collaborating with humans on creative projects, but also as possessing independent creative abilities.

Liberals, by contrast, may be drawing on a different set of concerns that temper their optimism. Left-leaning discourse around AI often highlights issues of ethics, inequality, and corporate power. Who controls the algorithms? Will AI perpetuate biases or increase inequality? These are questions frequently raised by liberal policymakers and activists. It wasn’t surprising, then, that in our data liberals showed more caution – they might not be anti-AI per se, but they’re less likely to give it a blank check of confidence. They want guardrails. Interestingly, this mirrors some real-world events: for example, some progressive voices have called for regulations on AI to prevent harms (bias, job loss, etc.), while more conservative voices (not all, but some) argue against heavy regulation to let innovation flourish. Our findings capture a similar spirit at the individual level.

The implications of this political perception gap are far-reaching. As AI technology races ahead, public policy and adoption will be influenced by how different groups view it. We’re used to tech issues becoming politicized (think climate science or stem cell research in the past) in ways where the right often casts doubt on scientific consensus and the left defends it. But with AI, both sides acknowledge the tech is powerful – the debate is over how to handle that power. Here, some on the right are essentially saying “full steam ahead, the opportunities outweigh the risks,” while voices on the left say “hold on, let’s make sure we don’t cause collateral damage.” Notably, this isn’t universal – there are certainly tech enthusiasts among liberals and AI-wary folks among conservatives – but the trend in our data was strong enough to turn heads.

One particularly counterintuitive nugget: conservatives in our study were much more likely to agree with the statement that “AI can be a creative muse for humans,” embracing a somewhat whimsical, hopeful view of human-AI partnership. It’s counterintuitive because creativity and artistic pursuits aren’t traditionally politicized, yet here we have a political correlate in seeing AI as inspiring. Perhaps conservatives, often caricatured as resistant to change, are in this case enchanted by the novelty and utility of a cutting-edge tool. Meanwhile, liberals—often seen as champions of progressive change—appear more hesitant about this particular change, maybe because it’s driven largely by big tech companies and could exacerbate social issues they care about (like inequality or bias).

There’s also a socio-cultural element. Conservatives might be less exposed to some of the critical academic discourse on AI ethics (which often comes out of universities and international organizations that skew progressive). Instead, they might be hearing success stories of AI in business and the military (areas where a can-do attitude prevails). Liberals, conversely, may be influenced by cautionary tales: reading New York Times op-eds about AI’s dangers, discussing ethics in tech forums, and so on. Each group’s information diet around AI could therefore differ, reinforcing its leanings. Indeed, recent surveys by Pew and others have found differences in trust in tech companies and support for tech regulation along partisan lines, aligning with the patterns we observed.

The finding that “who loves or fears AI” can correlate with political ideology is a reminder that technology doesn’t exist in a vacuum. It gets absorbed into our existing worldviews. For communicators and leaders in the AI space, this means messages about AI might need tailoring. If you’re speaking to a business-friendly or conservative audience, emphasizing the creative and economic upsides of AI will resonate – they’re already inclined to see the machine as a Muse and money-maker. On the other hand, with more liberal or skeptical audiences, it’s crucial to address the risks and ethics upfront – acknowledge their concerns about fairness, transparency, and impacts on society before touting the benefits. Bridging this divide is important. We wouldn’t want AI to become a partisan football where one side’s overzealousness leads to avoidable errors, or the other side’s fears lead to missed opportunities.

Ultimately, understanding this paradoxical divide can help us find common ground. Both conservatives and liberals in our study, despite differences, agreed on one thing: AI is important and here to stay (virtually no one said “AI doesn’t matter”). That’s a start. From there, perhaps a balanced narrative can emerge – one that satisfies the right’s optimism by actively pursuing AI’s benefits, while heeding the left’s caution by putting ethical safeguards in place. If we do it right, we won’t have to choose between being cheerleaders or critics. Like a good bipartisan handshake, we can approach AI with pragmatic hope – welcoming innovation with eyes open to its societal context. And maybe that’s not paradoxical at all, but just plain common sense.

Appendix: Pilot Consumer Study on AI

How do people really feel about artificial intelligence—hopeful, curious, uneasy, or all of the above? Our new study dives into the everyday realities of generative AI, capturing how individuals use tools like ChatGPT and Midjourney, what excites them, and what worries them. From creativity and productivity to job disruption and privacy concerns, the survey explores the full spectrum of public opinion on AI’s growing role in society. We examine not only how people are using AI today, but also what they believe it means for the future of work, relationships, education, and more. Whether AI is seen as a helper, a rival, or something in between, this study offers a window into how regular U.S. consumers are navigating the AI revolution.